Google Crawl an Error Has Occurred Please Try Again Later

Want to Support Me?

Get two free stocks valued up to $1,850 when you open a new Webull investment account through my referral link and fund the account with at least $100!

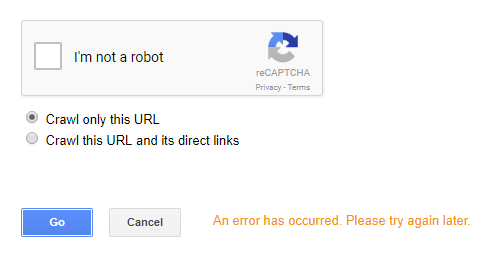

Twice in the last few weeks, I submitted multiple links using the Fetch as Google tool to make sure certain pages were either added or updated in the Google index, and while doing so, I began receiving the dreaded message "An error has occurred. Please try again later."

The Problem

In the past, Google allotted a quota of X number of index requests per month. But apparently, the system has changed, and there is no longer any quota listed when hovering over the two crawl options.

It seemed like I was only able to get through about 10 index request submissions before I started getting the error message, so I switched to a different account to start over and keep count.

I was able to submit nine index requests before I got the "An error occurred" message on the 10th request. A user on a Webmaster Central Help Forum post indicated almost the same limit:

There is a global issue in Google Search Console with submitting URLs as you have described. Will let you submit 10 URLs (per day) then error message appears.

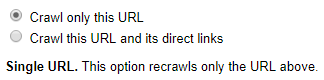

Note that you can submit some additional requests using the "Crawl this URL and its direct links" option, even after hitting the limit using the "Crawl only this URL" option, so there must be a separate limit for each.

I did try switching the crawl device from "Desktop" to "Mobile: Smartphone", but it did not allow me to submit any additional requests.

According to the forum post linked above, users pointed out that the Fetch as Google tool was never meant to get a whole bunch of pages indexed by Google. Google has all the automated means in the world to crawl sites and find new or updated content, so I can see that. Still, it's nice to immediately give Google knowledge of a change I made and see it updated quickly on SERPs.

I guess what that boils down to is that we should never have gotten used to submitting so many links through that tool, if that's truly not what Google intended it to be.

Solutions

So where does that leave us?

- You can still submit 9-10 URLs per day and per crawling option ("Crawl only this URL" or "Crawl this URL and its direct links"), for a total of 18-20 requests per day.

- You can give additional users access to your property, and when they access the Fetch as Google tool, they can submit additional URLs via their Search Console account.

- If you have new URLs you want to let Google know about, make sure they are in your sitemap.xml file and resubmit it to Google.

- If you have a bunch of pages on your site that have changed, and you want to let Google know about the changes, you can no longer submit the URLs individually, since you will quickly hit the 9-10 limit. A way around this would be to create a separate web page with links to the updated pages, then submit an index request for that page using the "Crawl this URL and its direct links" option.
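
If you keep a list of your new or changed pages somewhere, the last two options are easy to script. Here is a minimal Python sketch, not a definitive implementation: it builds a sitemap.xml (using the standard sitemap protocol elements `urlset`, `url`, `loc`, and `lastmod`) and a plain HTML page that links to every updated URL. The function names, the `EXAMPLE_PAGES` list, and the example.com URLs are all made up for illustration, so swap in your own.

```python
from datetime import date
from html import escape
from xml.etree import ElementTree as ET

# Namespace defined by the sitemap protocol (sitemaps.org).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical data: (URL, date the page last changed).
EXAMPLE_PAGES = [
    ("https://example.com/blog/new-post/", date(2018, 3, 1)),
    ("https://example.com/about/?ref=nav&lang=en", date(2018, 3, 2)),
]


def build_sitemap(pages):
    """Return sitemap XML as a string for (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the text for us.
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def build_hub_page(pages, title="Recently updated pages"):
    """Return a simple HTML page with one link per updated URL."""
    links = "\n".join(
        f'    <li><a href="{escape(url)}">{escape(url)}</a></li>'
        for url, _ in pages
    )
    return (
        "<!DOCTYPE html>\n"
        "<html>\n"
        f"<head><title>{escape(title)}</title></head>\n"
        "<body>\n"
        "  <ul>\n"
        f"{links}\n"
        "  </ul>\n"
        "</body>\n"
        "</html>\n"
    )
```

To use it, write each returned string to a file with UTF-8 encoding, for example `open("sitemap.xml", "w", encoding="utf-8").write(build_sitemap(EXAMPLE_PAGES))`, upload both files to your site, resubmit the sitemap, and submit the hub page once using the "Crawl this URL and its direct links" option. That spends a single request instead of one per page.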

Your Turn!

Did you land here because you've received this error too? What solution did you use to get past this issue? Let's discuss below in the comments!

Source: https://washamdev.com/why-does-fetch-as-google-tool-display-an-error-occurred/
